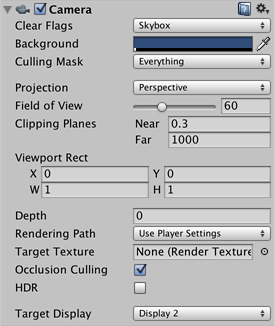

Camera

Cameras are the devices that capture and display the world to the player. By customizing and manipulating cameras, you can make the presentation of your game truly unique. You can have an unlimited number of cameras in a scene. They can be set to render in any order, at any place on the screen, or only certain parts of the screen.

Properties

| Property: | Function: |
|---|---|
| Clear Flags | Determines which parts of the screen will be cleared. This is handy when using multiple Cameras to draw different game elements. |
| Background | Color applied to the remaining screen after all elements in view have been drawn and there is no skybox. |
| Culling Mask | Include or omit layers of objects to be rendered by the Camera. Assign layers to your objects in the Inspector. |
| Projection | Toggles the camera’s capability to simulate perspective. |
| Perspective | Camera will render objects with perspective intact. |
| Orthographic | Camera will render objects uniformly, with no sense of perspective. NOTE: Deferred rendering is not supported in Orthographic mode. Forward rendering is always used. |
| Size (when Orthographic is selected) | The viewport size of the Camera when set to Orthographic. |
| Field of view (when Perspective is selected) | Width of the Camera’s view angle, measured in degrees along the local Y axis. |
| Clipping Planes | Distances from the camera to start and stop rendering. |
| Near | The closest point relative to the camera that drawing will occur. |
| Far | The furthest point relative to the camera that drawing will occur. |
| Normalized View Port Rect | Four values that indicate where on the screen this camera view will be drawn, in Screen Coordinates (values 0–1). |
| X | The beginning horizontal position that the camera view will be drawn. |
| Y | The beginning vertical position that the camera view will be drawn. |
| W (Width) | Width of the camera output on the screen. |
| H (Height) | Height of the camera output on the screen. |
| Depth | The camera’s position in the draw order. Cameras with a larger value will be drawn on top of cameras with a smaller value. |
| Rendering Path | Options for defining what rendering methods will be used by the camera. |
| Use Player Settings | This camera will use whichever Rendering Path is set in the Player Settings. |
| Vertex Lit | All objects rendered by this camera will be rendered as Vertex-Lit objects. |
| Forward | All objects will be rendered with one pass per material. |
| Deferred Lighting | All objects will be drawn once without lighting, then lighting of all objects will be rendered together at the end of the render queue. NOTE: If the camera’s projection mode is set to Orthographic, this value is overridden, and the camera will always use Forward rendering. |
| Target Texture | Reference to a Render Texture that will contain the output of the Camera view. Making this reference will disable this Camera’s capability to render to the screen. |
| HDR | Enables High Dynamic Range rendering for this camera. |
| Target Display | Which external device to render to. Between 1 and 8. |

Details

Cameras are essential for displaying your game to the player. They can be customized, scripted, or parented to achieve just about any kind of effect imaginable. For a puzzle game, you might keep the Camera static for a full view of the puzzle. For a first-person shooter, you would parent the Camera to the player character, and place it at the character’s eye level. For a racing game, you’d likely want to have the Camera follow your player’s vehicle.

You can create multiple Cameras and assign each one to a different Depth. Cameras are drawn from low Depth to high Depth. In other words, a Camera with a Depth of 2 will be drawn on top of a Camera with a depth of 1. You can adjust the values of the Normalized View Port Rectangle property to resize and position the Camera’s view onscreen. This can create multiple mini-views like missile cams, map views, rear-view mirrors, etc.
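
As a rough illustration of the Depth and viewport settings described above, the following sketch configures a second camera as a small overlay view from a script. The class name, field names and rectangle values are hypothetical; it assumes both cameras are assigned in the Inspector.

```csharp
using UnityEngine;

// Hypothetical helper: turns a second camera into a small overlay view.
public class MiniViewSetup : MonoBehaviour
{
    public Camera mainCamera;  // draws the full scene
    public Camera miniCamera;  // draws the small overlay view

    void Start()
    {
        // Cameras are drawn from low Depth to high Depth,
        // so the mini view ends up on top of the main view.
        mainCamera.depth = 0f;
        miniCamera.depth = 1f;

        // Rect(x, y, width, height) in normalized coordinates (0-1),
        // measured from the bottom-left corner of the screen.
        // These example values place the view in the upper-right corner.
        miniCamera.rect = new Rect(0.7f, 0.7f, 0.25f, 0.25f);
    }
}
```

Attach the script to any GameObject in the scene and drag the two cameras onto its fields.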

Render Path

Unity supports different Rendering Paths. Choose one based on your game content and your target platform and hardware. Different rendering paths have different features and performance characteristics that mostly affect Lights and Shadows. The Rendering Path used by your project is chosen in Player Settings. Additionally, you can override it for each Camera.

For more info on rendering paths, check the rendering paths page.
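
The per-camera override can also be set from a script. The sketch below is a minimal example, assuming the built-in rendering paths described on this page; the class name is hypothetical.

```csharp
using UnityEngine;

// Hypothetical example component; attach it to a GameObject that has a Camera.
[RequireComponent(typeof(Camera))]
public class RenderingPathOverride : MonoBehaviour
{
    void Start()
    {
        Camera cam = GetComponent<Camera>();

        // Override the project-wide setting for this camera only.
        cam.renderingPath = RenderingPath.Forward;

        // The path actually in use can differ from the requested one,
        // for example when the hardware does not support it.
        Debug.Log("Requested: " + cam.renderingPath +
                  ", in use: " + cam.actualRenderingPath);
    }
}
```

Setting the value back to `RenderingPath.UsePlayerSettings` returns the camera to the project default.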

Clear Flags

Each Camera stores color and depth information when it renders its view. The portions of the screen that are not drawn in are empty, and will display the skybox by default. When you are using multiple Cameras, each one stores its own color and depth information in buffers, accumulating more data as each Camera renders. As any particular Camera in your scene renders its view, you can set the Clear Flags to clear different collections of the buffer information. This is done by choosing one of the four options:

Skybox

This is the default setting. Any empty portions of the screen will display the current Camera’s skybox. If the current Camera has no skybox set, it will default to the skybox chosen in the Lighting Window (menu: ). It will then fall back to the Background Color. Alternatively a Skybox component can be added to the camera. If you want to create a new Skybox, you can use this guide.

Solid Color

Any empty portions of the screen will display the current Camera’s Background Color.

Depth Only

If you want to draw a player’s gun without letting it get clipped inside the environment, set one Camera at Depth 0 to draw the environment and another Camera at Depth 1 to draw the weapon alone. Set the weapon Camera’s Clear Flags to Depth only. This keeps the graphical display of the environment on the screen, but discards all information about where each object exists in 3D space. When the gun is drawn, its opaque parts completely cover anything drawn before it, regardless of how close the gun is to the wall.
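
The two-camera weapon setup can be sketched in a script as follows. This is only one possible arrangement: it assumes the weapon objects sit on a layer named "Weapon", which is a hypothetical layer that you would need to create yourself, and it uses the Culling Mask to make the second camera draw the weapon alone.

```csharp
using UnityEngine;

// Hypothetical helper for the environment + weapon camera pair.
public class WeaponCameraSetup : MonoBehaviour
{
    public Camera environmentCamera;
    public Camera weaponCamera;
    public string weaponLayerName = "Weapon"; // assumed layer name

    void Start()
    {
        int weaponLayer = LayerMask.NameToLayer(weaponLayerName);
        if (weaponLayer < 0)
        {
            Debug.LogWarning("Layer '" + weaponLayerName + "' does not exist.");
            return;
        }
        int weaponMask = 1 << weaponLayer;

        // Depth 0: draws everything except the weapon layer.
        environmentCamera.depth = 0f;
        environmentCamera.cullingMask &= ~weaponMask;

        // Depth 1: keeps the colors already on screen, clears only the
        // depth buffer, then draws the weapon layer on top.
        weaponCamera.depth = 1f;
        weaponCamera.clearFlags = CameraClearFlags.Depth;
        weaponCamera.cullingMask = weaponMask;
    }
}
```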

Don’t Clear

This mode does not clear either the color or the depth buffer. The result is that each frame is drawn over the previous one, resulting in a smear-looking effect. This isn’t typically used in games, and would likely be best used with a custom shader.

Clip Planes

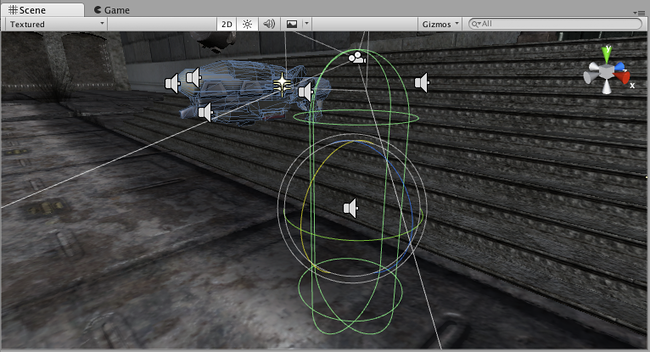

The Near and Far Clip Plane properties determine where the Camera’s view begins and ends. The planes are laid out perpendicular to the Camera’s direction and are measured from its position. The Near plane is the closest location that will be rendered, and the Far plane is the furthest.

The clipping planes also determine how depth buffer precision is distributed over the scene. In general, to get better precision you should move the Near plane as far from the camera as your scene allows.
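
As a rough illustration, the sketch below sets both planes from a script and logs the far-to-near ratio. A smaller ratio generally leaves more depth precision for the scene. The class name and the distances are hypothetical example values, not recommendations.

```csharp
using UnityEngine;

// Hypothetical example component; attach it to a GameObject that has a Camera.
[RequireComponent(typeof(Camera))]
public class ClipPlaneSetup : MonoBehaviour
{
    public float nearDistance = 0.5f; // example value only
    public float farDistance = 500f;  // example value only

    void Start()
    {
        Camera cam = GetComponent<Camera>();
        cam.nearClipPlane = nearDistance;
        cam.farClipPlane = farDistance;

        // Doubling the near distance halves this ratio.
        Debug.Log("Far/near ratio: " + (cam.farClipPlane / cam.nearClipPlane));
    }
}
```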

Note that the near and far clip planes together with the planes defined by the field of view of the camera describe what is popularly known as the camera frustum. Unity ensures that when rendering your objects those which are completely outside of this frustum are not displayed. This is called Frustum Culling. Frustum Culling happens irrespective of whether you use Occlusion Culling in your game.
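
Unity performs Frustum Culling for you, but it can be useful to inspect the frustum from a script. The following sketch checks whether an object's bounding box touches a camera's frustum. The class and field names are hypothetical, and the check is only an approximation of what the renderer does internally.

```csharp
using UnityEngine;

// Hypothetical debugging helper: reports whether a renderer's bounds
// are inside or intersecting a camera's frustum.
public class FrustumCheck : MonoBehaviour
{
    public Camera cam;
    public Renderer target;

    void Update()
    {
        // The six planes: left, right, bottom, top, near and far.
        // Note that this call allocates a new array each time.
        Plane[] planes = GeometryUtility.CalculateFrustumPlanes(cam);

        // True if the world-space bounding box is inside or
        // intersects the volume enclosed by the planes.
        bool touchesFrustum = GeometryUtility.TestPlanesAABB(planes, target.bounds);

        if (!touchesFrustum)
        {
            Debug.Log(target.name + " is completely outside the frustum.");
        }
    }
}
```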

For performance reasons, you might want to cull small objects earlier. For example, small rocks and debris could be made invisible at a much smaller distance than large buildings. To do that, put small objects into a separate layer and set up per-layer cull distances using the Camera.layerCullDistances script property.
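
A minimal sketch of per-layer cull distances is shown below. It assumes a layer named "SmallObjects" exists in your project; that name, the class name and the distance are hypothetical.

```csharp
using UnityEngine;

// Hypothetical example component; attach it to a GameObject that has a Camera.
[RequireComponent(typeof(Camera))]
public class SmallObjectCulling : MonoBehaviour
{
    public string smallObjectLayerName = "SmallObjects"; // assumed layer name
    public float smallObjectCullDistance = 50f;          // example value only

    void Start()
    {
        Camera cam = GetComponent<Camera>();

        int layer = LayerMask.NameToLayer(smallObjectLayerName);
        if (layer < 0)
        {
            Debug.LogWarning("Layer '" + smallObjectLayerName + "' does not exist.");
            return;
        }

        // One entry per layer (32 in total). An entry of 0 means
        // "use the camera's Far plane" for that layer.
        float[] distances = new float[32];
        distances[layer] = smallObjectCullDistance;
        cam.layerCullDistances = distances;
    }
}
```

Objects on the chosen layer stop rendering beyond the given distance, while all other layers keep using the Far plane.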

Culling Mask

The Culling Mask is used for selectively rendering groups of objects using Layers. More information on using layers can be found here.

It is good practice to put your User Interface on its own layer, then render it with a separate Camera whose Culling Mask includes only the UI layer.

In order for the UI to display on top of the other Camera views, you’ll also need to set the Clear Flags to Depth only and make sure that the UI Camera’s Depth is higher than the other Cameras.
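
The UI camera arrangement can be sketched as follows. The example assumes the interface objects are on a layer named "UI"; the class and field names are hypothetical.

```csharp
using UnityEngine;

// Hypothetical helper: splits rendering between a scene camera and a UI camera.
public class UICameraSetup : MonoBehaviour
{
    public Camera sceneCamera;
    public Camera uiCamera;

    void Start()
    {
        // GetMask returns 0 if no layer with this name exists.
        int uiMask = LayerMask.GetMask("UI");
        if (uiMask == 0)
        {
            Debug.LogWarning("No layer named 'UI' was found.");
            return;
        }

        // The scene camera skips the UI layer; the UI camera draws only that layer.
        sceneCamera.cullingMask &= ~uiMask;
        uiCamera.cullingMask = uiMask;

        // Keep the scene image, clear only depth, and draw after the scene camera.
        uiCamera.clearFlags = CameraClearFlags.Depth;
        uiCamera.depth = sceneCamera.depth + 1f;
    }
}
```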

Normalized Viewport Rectangle

Normalized Viewport Rectangles are specifically for defining a certain portion of the screen that the current camera view will be drawn upon. You can put a map view in the lower-right hand corner of the screen, or a missile-tip view in the upper-left corner. With a bit of design work, you can use Viewport Rectangle to create some unique behaviors.

It’s easy to create a two-player split screen effect using Normalized Viewport Rectangle. After you have created your two cameras, change both cameras’ H values to 0.5, then set player one’s Y value to 0.5 and player two’s Y value to 0. This will make player one’s camera display from halfway up the screen to the top, and player two’s camera will start at the bottom and stop halfway up the screen.

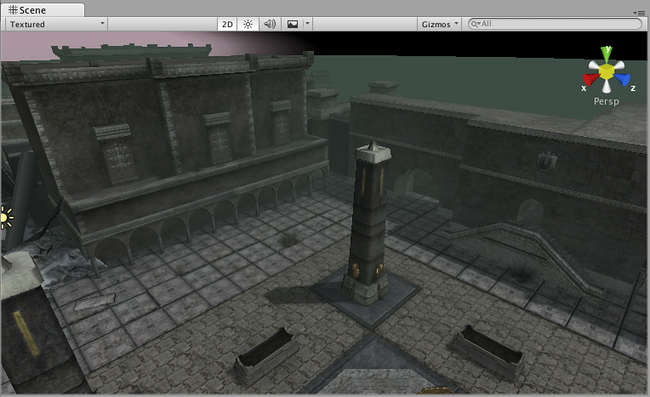

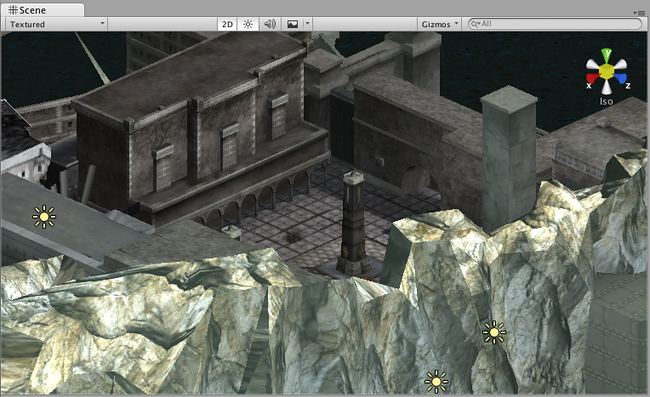
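
The same split screen layout can be set from a script. In this sketch the class and field names are hypothetical, and the logged pixel rectangles are only there to show how the normalized values map onto the actual screen size.

```csharp
using UnityEngine;

// Hypothetical helper: stacks two camera views vertically.
public class SplitScreenSetup : MonoBehaviour
{
    public Camera playerOneCamera;
    public Camera playerTwoCamera;

    void Start()
    {
        // Rect(x, y, width, height), all in the 0-1 range.
        // Player one: top half of the screen (Y = 0.5, H = 0.5).
        playerOneCamera.rect = new Rect(0f, 0.5f, 1f, 0.5f);

        // Player two: bottom half of the screen (Y = 0, H = 0.5).
        playerTwoCamera.rect = new Rect(0f, 0f, 1f, 0.5f);

        // pixelRect reports the same areas in screen pixels.
        Debug.Log("Player one: " + playerOneCamera.pixelRect);
        Debug.Log("Player two: " + playerTwoCamera.pixelRect);
    }
}
```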

Orthographic

Marking a Camera as Orthographic removes all perspective from the Camera’s view. This is mostly useful for making isometric or 2D games.

Note that fog is rendered uniformly in orthographic camera mode and may therefore not appear as expected. This is because the Z coordinate of the post-perspective space is used for the fog “depth”. This is not strictly accurate for an orthographic camera but it is used for its performance benefits during rendering.
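
Orthographic mode can also be enabled from a script. The sketch below switches a camera to orthographic projection and works out how much of the world it shows: the Size value is half the visible height in world units, and the visible width follows from the aspect ratio. The class name and the size are hypothetical.

```csharp
using UnityEngine;

// Hypothetical example component; attach it to a GameObject that has a Camera.
[RequireComponent(typeof(Camera))]
public class OrthographicSetup : MonoBehaviour
{
    public float halfHeight = 5f; // example value, in world units

    void Start()
    {
        Camera cam = GetComponent<Camera>();
        cam.orthographic = true;
        cam.orthographicSize = halfHeight;

        // Size is half of the vertical extent of the view.
        float visibleHeight = 2f * cam.orthographicSize;
        float visibleWidth = visibleHeight * cam.aspect;

        Debug.Log("Visible area: " + visibleWidth + " x " + visibleHeight + " world units");
    }
}
```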

Render Texture

Assigning a Render Texture to the Target Texture property places the Camera’s view onto a Texture that can then be applied to another object. This makes it easy to create sports arena video monitors, surveillance cameras, reflections, etc.
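
A surveillance monitor of this kind could be sketched as follows. The class name, field names and texture size are hypothetical, and the example assumes the monitor object's material displays its main texture.

```csharp
using UnityEngine;

// Hypothetical helper: shows one camera's view on another object's material.
public class MonitorFeed : MonoBehaviour
{
    public Camera feedCamera;        // the camera whose view is captured
    public Renderer monitorRenderer; // the object that displays the view

    private RenderTexture feedTexture;

    void Start()
    {
        // Width, height and depth buffer bits; example values only.
        feedTexture = new RenderTexture(512, 512, 16);

        // From now on this camera renders into the texture, not to the screen.
        feedCamera.targetTexture = feedTexture;
        monitorRenderer.material.mainTexture = feedTexture;
    }

    void OnDestroy()
    {
        // Detach and free the texture when the helper goes away.
        if (feedCamera != null)
        {
            feedCamera.targetTexture = null;
        }
        if (feedTexture != null)
        {
            feedTexture.Release();
        }
    }
}
```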

Target Display

Each camera can be set to render to one of up to 8 displays (monitors). This is supported only on PC, Mac and Linux. The Game View shows the display chosen in the Camera Inspector.
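
The following sketch sends a camera to a second monitor when one is connected. The class and field names are hypothetical, and the example assumes that the script-side display index is zero-based, so index 1 corresponds to the second display.

```csharp
using UnityEngine;

// Hypothetical helper: renders a camera on the second display if present.
public class SecondDisplayCamera : MonoBehaviour
{
    public Camera secondCamera;

    void Start()
    {
        // Display.displays lists the displays that are currently connected.
        if (Display.displays.Length > 1)
        {
            // Additional displays must be activated before they show anything.
            Display.displays[1].Activate();

            // Assumed zero-based index: 1 means the second display.
            secondCamera.targetDisplay = 1;
        }
        else
        {
            Debug.Log("Only one display is connected.");
        }
    }
}
```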

Hints

- Cameras can be instantiated, parented, and scripted just like any other GameObject.

- To increase the sense of speed in a racing game, use a high Field of View.

- Cameras can be used in physics simulation if you add a Rigidbody Component.

- There is no limit to the number of Cameras you can have in your scenes.

- Orthographic cameras are great for making 3D user interfaces.

- If you are experiencing depth artifacts (surfaces close to each other flickering), try setting the Near Plane to as large a value as possible.

- Cameras cannot render to the Game Screen and a Render Texture at the same time, only one or the other.

- There’s an option of rendering a Camera’s view to a texture, called Render-to-Texture, for even more unique effects.

- Unity comes with pre-installed Camera scripts, found in . Experiment with them to get a taste of what’s possible.
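
The Field of View hint above can be sketched as a script that widens the view angle as a vehicle speeds up. Everything here is an assumption for illustration: the class name, the field names, the angle range and the top speed are hypothetical, and the vehicle is assumed to be driven by a Rigidbody.

```csharp
using UnityEngine;

// Hypothetical example component; attach it to a GameObject that has a Camera.
[RequireComponent(typeof(Camera))]
public class SpeedFieldOfView : MonoBehaviour
{
    public Rigidbody vehicle;          // the vehicle being followed
    public float baseFieldOfView = 60f; // angle when standing still
    public float maxFieldOfView = 80f;  // angle at or above maxSpeed
    public float maxSpeed = 50f;        // speed in units per second

    private Camera cam;

    void Awake()
    {
        cam = GetComponent<Camera>();
    }

    void LateUpdate()
    {
        // 0 when stationary, 1 at or above maxSpeed.
        float t = Mathf.Clamp01(vehicle.velocity.magnitude / maxSpeed);

        // Blend between the two angles based on the current speed.
        cam.fieldOfView = Mathf.Lerp(baseFieldOfView, maxFieldOfView, t);
    }
}
```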